Whatever your philosophical stance on what science is, there is wide agreement that experimentation is at the core of the modern scientific method. Repeating the same experiments is central to science, because it is how findings are validated and confirmed.

Moreover, the new paradigm for science based on data-intensive computing, known as the fourth paradigm, has opened a completely new way of making scientific discoveries, in which experiments driven by computation are becoming increasingly prominent. At the same time, we are facing the so-called reproducibility crisis across all areas of science, where researchers fail to reproduce and confirm previous experimental findings.

References & links

iDPP@CLEF 2022

iDPP@CLEF 2023

Faggioli, G., Guazzo, A., Marchesin, S., Menotti, L., Trescato, I., Aidos, H., Bergamaschi, R., Birolo, G., Cavalla, P., Chiò, A., Dagliati, A., de Carvalho, M., Di Nunzio, G. M., Fariselli, P., Garcia Dominguez, J. M., Gromicho, M., Longato, E., Madeira, S. C., Manera, U., Silvello, G., Tavazzi, E., Tavazzi, E., Vettoretti, M., Di Camillo, B., and Ferro, N. (2023). Intelligent Disease Progression Prediction: Overview of iDPP@CLEF 2023. In Experimental IR Meets Multilinguality, Multimodality, and Interaction. Proceedings of the Fourteenth International Conference of the CLEF Association (CLEF 2023), pages 343-369. Lecture Notes in Computer Science (LNCS) 14163, Springer, Heidelberg, Germany. doi: https://doi.org/10.1007/978-3-

Faggioli, G., Guazzo, A., Marchesin, S., Menotti, L., Trescato, I., Aidos, H., Bergamaschi, R., Birolo, G., Cavalla, P., Chiò, A., Dagliati, A., de Carvalho, M., Di Nunzio, G. M., Fariselli, P., Garcia Dominguez, J. M., Gromicho, M., Longato, E., Madeira, S. C., Manera, U., Silvello, G., Tavazzi, E., Tavazzi, E., Vettoretti, M., Di Camillo, B., and Ferro, N. (2023). Overview of iDPP@CLEF 2023: The Intelligent Disease Progression Prediction Challenge. In CLEF 2023 Working Notes, pages 1123-1164. CEUR Workshop Proceedings (CEUR-WS.org), ISSN 1613-0073. URL: https://ceur-ws.org/Vol-3497/

iDPP@CLEF 2024

Birolo, G., Bosoni, P., Faggioli, G., Aidos, H., Bergamaschi, R., Cavalla, P., Chiò, A., Dagliati, A., de Carvalho, M., Di Nunzio, G. M., Fariselli, P., Garcia Dominguez, J. M., Gromicho, M., Guazzo, A., Longato, E., Madeira, S., Manera, U., Marchesin, S., Menotti, L., Silvello, G., Tavazzi, E., Tavazzi, E., Trescato, I., Vettoretti, M., Di Camillo, B., and Ferro, N. (2024). Intelligent Disease Progression Prediction: Overview of iDPP@CLEF 2024. In Experimental IR Meets Multilinguality, Multimodality, and Interaction. Proceedings of the Fifteenth International Conference of the CLEF Association (CLEF 2024) – Part II, pages 118-139. Lecture Notes in Computer Science (LNCS) 14959, Springer, Heidelberg, Germany. doi: https://doi.org/10.1007/978-3-

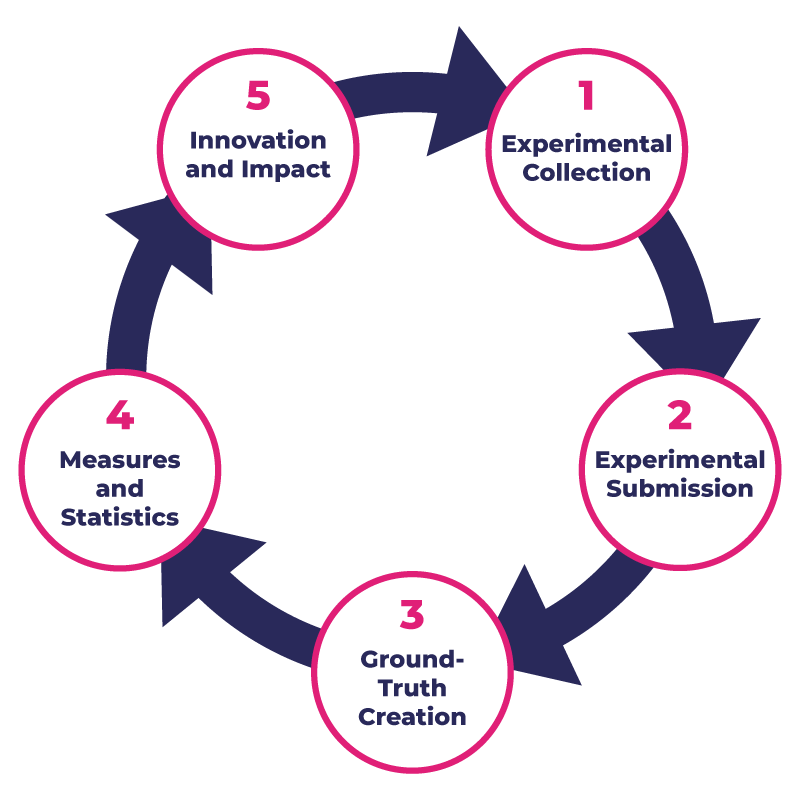

The open evaluation challenges organized by BRAINTEASER follow the workflow shown in the figure, which guarantees their scientific soundness and thorough organization.

Help foster predictive healthcare approaches and support clinicians, so patients with amyotrophic lateral sclerosis and multiple sclerosis experience healthier and more fulfilling lives

Leverage artificial intelligence to enhance clinical care and help design personalised health and care pathways

Adopt an open-science paradigm that makes scientific research results accessible, respect patient data confidentiality and ownership, and involve end users in the co-design of IT solutions so that these consistently meet real needs

1. STEP

This step concerns the creation of the experimental collections and consists of acquiring and preparing the datasets.

2. STEP

The participants test their systems and submit the output of their experiments.

3. STEP

The campaign organizers use the gathered experiments to create the ground truth, typically adopting an appropriate sampling technique to select a subset of the dataset for each topic. The datasets and the ground truth are then used to compute performance measures for each experiment.

4. STEP

Measurements are used to produce descriptive statistics and perform statistical tests in order to compare and understand the behaviour of different approaches and systems and to allow for their improvement. Then, these performance measurements and analyses serve to prepare reports about the experiments, the techniques they used and their findings.

5. STEP

To maximize knowledge transfer and impact, the experimental data and findings are published together with their reports and made available for further exploitation and reuse. Moreover, a public event is organized where the proposed approaches and their performance are presented, discussed, and compared in a live setting, which facilitates the transfer of competencies and know-how among participants.
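The scoring and analysis described in steps 3 and 4 can be illustrated with a minimal sketch. It is not the procedure used in the BRAINTEASER challenges: the measure (precision at k), the paired sign-flip randomization test, the toy topics, and every function and variable name are assumptions chosen only to show the general idea of scoring submitted runs against a ground truth and then comparing them statistically.

```python
import random
import statistics


def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k ranked items that appear in the ground truth."""
    return sum(1 for item in ranked[:k] if item in relevant) / k


def score_run(run, ground_truth, k=3):
    """Per-topic precision@k for one submitted run (hypothetical measure)."""
    return {t: precision_at_k(run[t], ground_truth[t], k)
            for t in sorted(ground_truth)}


def paired_randomization_test(scores_a, scores_b, trials=10000, seed=0):
    """Two-sided sign-flip randomization test on per-topic differences."""
    rng = random.Random(seed)
    diffs = [scores_a[t] - scores_b[t] for t in sorted(scores_a)]
    observed = abs(statistics.mean(diffs))
    hits = 0
    for _ in range(trials):
        # Under the null hypothesis the sign of each difference is arbitrary.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(statistics.mean(flipped)) >= observed:
            hits += 1
    return hits / trials


# Toy ground truth and two toy runs (made-up data, three topics only).
ground_truth = {"t1": {"a", "b"}, "t2": {"c"}, "t3": {"d", "e", "f"}}
run_a = {"t1": ["a", "b", "x"], "t2": ["c", "y", "z"], "t3": ["d", "e", "f"]}
run_b = {"t1": ["x", "a", "y"], "t2": ["y", "z", "c"], "t3": ["d", "x", "y"]}

scores_a = score_run(run_a, ground_truth)
scores_b = score_run(run_b, ground_truth)

# Descriptive statistics per run, then a paired comparison across topics.
mean_a = statistics.mean(list(scores_a.values()))
mean_b = statistics.mean(list(scores_b.values()))
p_value = paired_randomization_test(scores_a, scores_b)

print(f"run A mean P@3: {mean_a:.3f}")
print(f"run B mean P@3: {mean_b:.3f}")
print(f"estimated p-value: {p_value:.3f}")
```

With only three topics the test has almost no power, so the example shows the mechanics rather than a meaningful result. A real campaign would use many more topics and measures suited to its tasks.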

In the context of BRAINTEASER, this evaluation workflow acts as a catalyst for innovation and exploitation for three main reasons:

participants in evaluation activities are challenged to improve their own systems by addressing the evaluation tasks;

the large, publicly available experimental collections and experimental results are a durable asset that can be continuously exploited to improve systems, also outside BRAINTEASER itself;

the open access publication of the results and their analyses, as well as the public event, allow for quick cross-fertilization, where best-of-breed approaches can be picked up and applied to one's own solutions and systems.

BRAINTEASER organizes its open evaluation challenges under the umbrella of the CLEF Initiative (Conference and Labs of the Evaluation Forum), the internationally renowned campaign series whose main mission is to promote research, innovation, and development of information access systems with an emphasis on multilingual and multimodal information with various levels of structure.